Product Catalog

Browse our selection of datacenter GPUs, servers, and rack systems

7 products

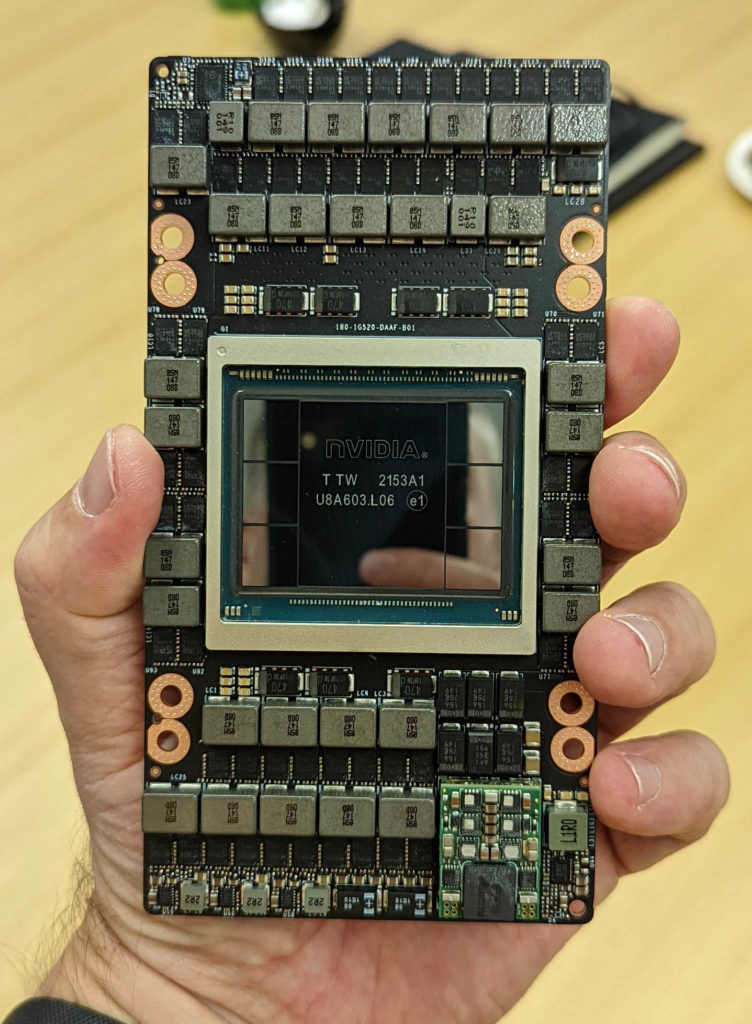

NVIDIA H100 80GB PCIe Gen5

NVIDIA H100 Tensor Core GPU in PCIe form factor with 80GB HBM2e memory. Ideal for AI inference and training in standard PCIe servers where NVLink-based multi-GPU scaling is not required. Contact our sales team for volume pricing and immediate availability.

$29,999

NVIDIA H100 80GB SXM5

NVIDIA H100 Tensor Core GPU in SXM5 form factor with NVLink for multi-GPU scaling. Designed for HGX server platforms and large-scale AI training clusters. Enterprise volume discounts are available; contact sales for custom configurations.

$32,999

NVIDIA H200 141GB HBM3e SXM5

Industry-leading Hopper-architecture GPU with 141GB HBM3e memory and 4.8TB/s memory bandwidth. Well suited to large language models, generative AI, and high-performance computing workloads. In stock now; contact us for immediate delivery and competitive pricing.

$39,999

NVIDIA B200 192GB Blackwell

Blackwell-architecture GPU with 192GB HBM3e and FP4 precision support for next-generation AI workloads. Pre-order now for 2025 delivery; reserve your allocation with our sales team.

Contact sales for pricing

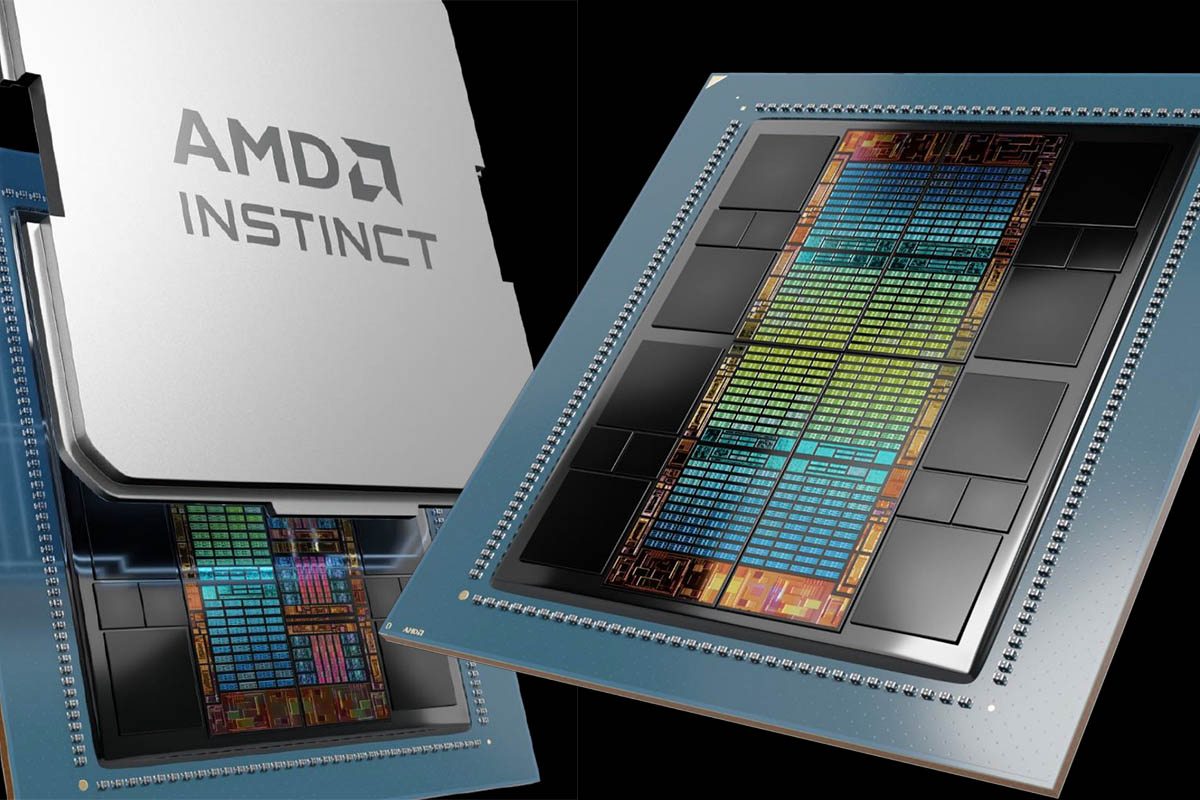

AMD Instinct MI300X 192GB HBM3 OAM

AMD's flagship AI accelerator with industry-leading 192GB HBM3 memory and 5.3TB/s bandwidth. Best-in-class performance per dollar for generative AI and large language models. Available now with competitive pricing and ROCm support.

$29,999

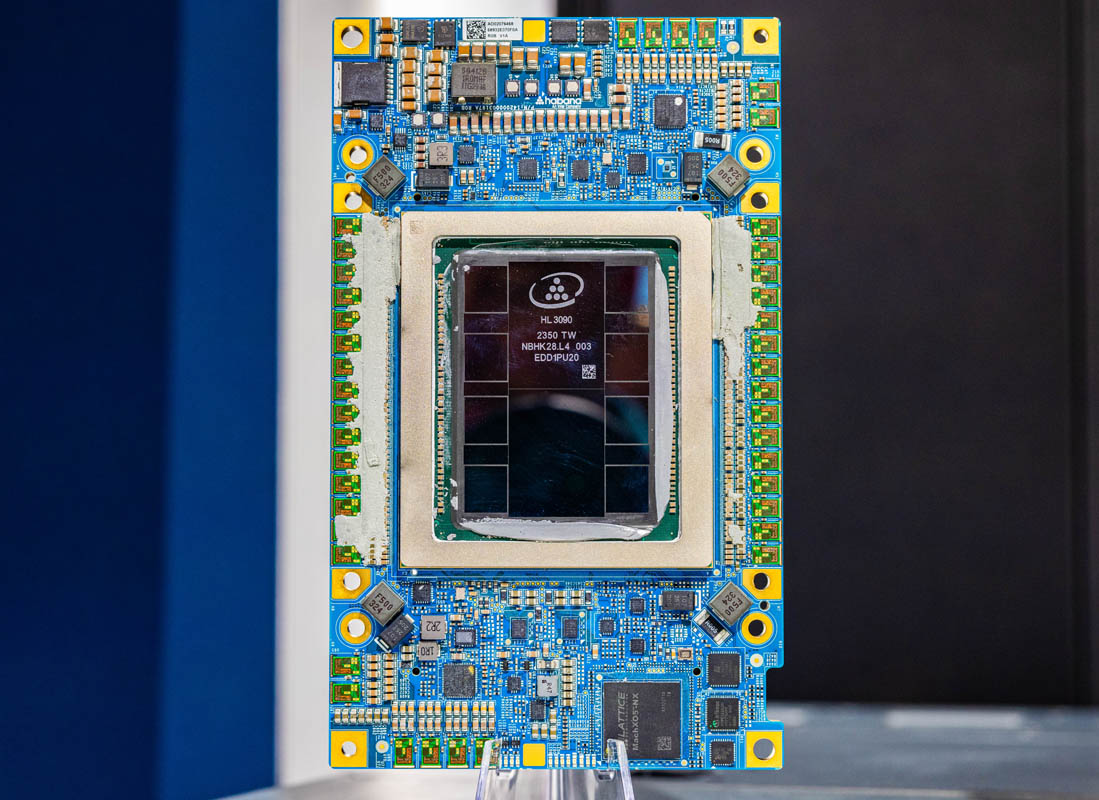

Intel Gaudi 3 AI Accelerator

Intel's third-generation AI accelerator, optimized for LLM training and inference. A cost-effective alternative with Ethernet-based scale-out. Contact us for evaluation units and pricing.

Contact sales for pricing

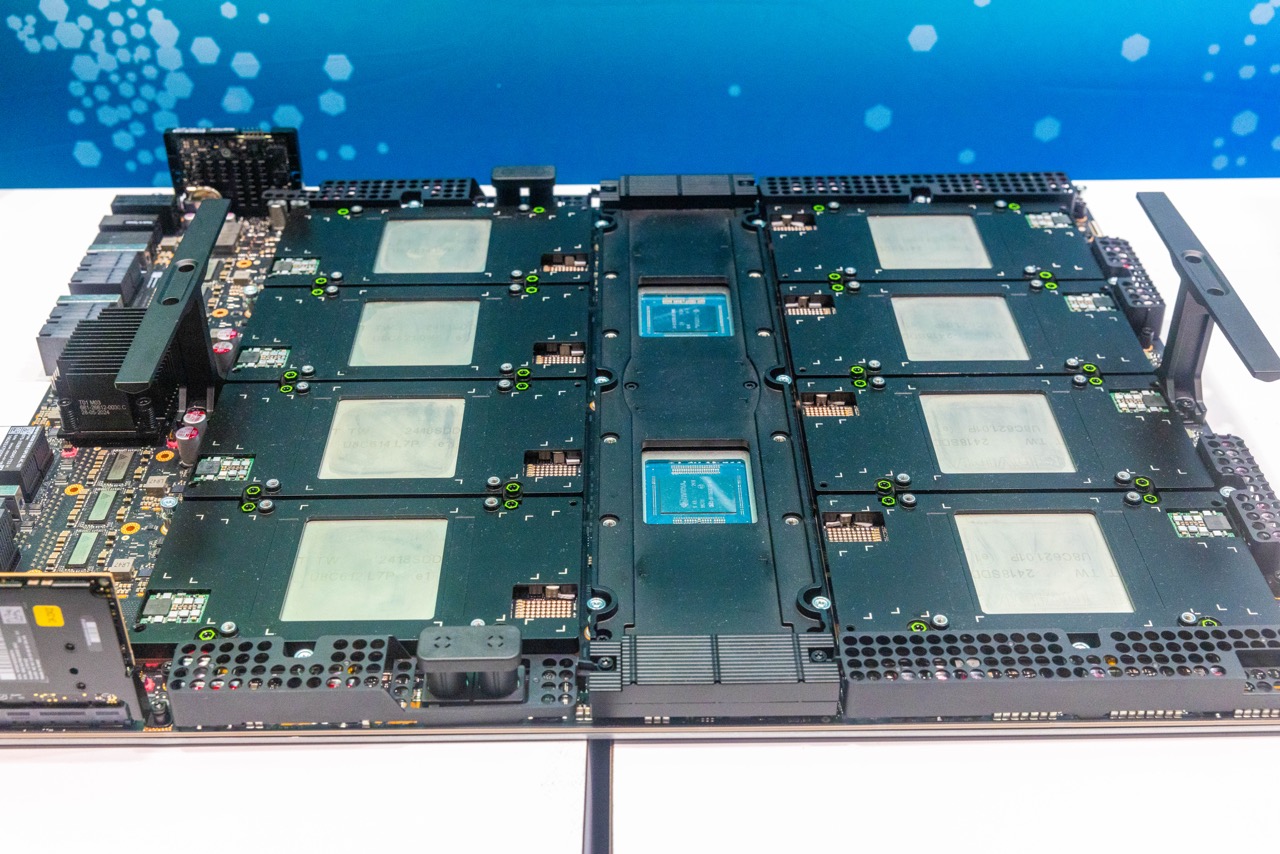

Supermicro 8x NVIDIA H200 AI Training Server - 4U Liquid-Cooled

Enterprise-ready 4U server with 8x NVIDIA H200 GPUs (1,128GB total GPU memory), dual Intel Xeon Platinum processors, and 2TB DDR5 RAM. Turnkey solution for large-scale AI training and LLM development. Custom configurations available with white-glove deployment.

$349,999