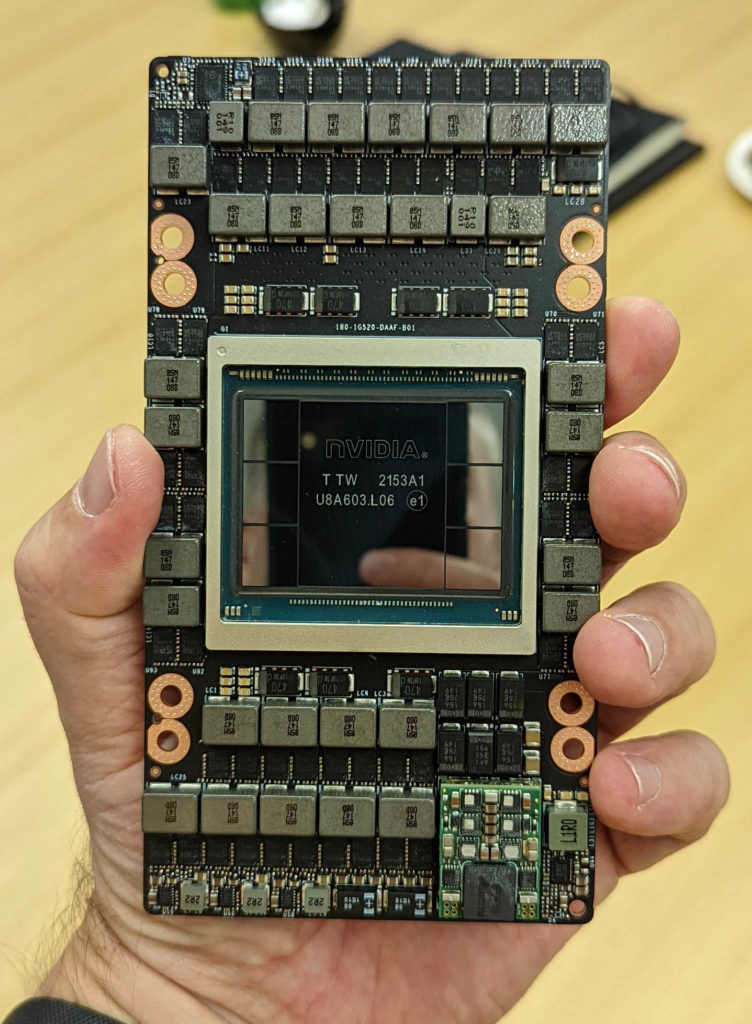

NVIDIA H200 141GB HBM3e SXM5

Industry-leading Hopper architecture GPU with 141GB HBM3e memory and 4.8TB/s bandwidth. Perfect for large language models, generative AI, and high-performance computing workloads. In stock now - contact us for immediate delivery and competitive pricing.

$39,999

$42,000

Ships directly from distributor

What it's for

The NVIDIA H200 Tensor Core GPU supercharges generative AI and high-performance computing (HPC) workloads with game-changing performance and memory capabilities. As the first GPU with HBM3e memory, H200's faster, larger memory fuels the acceleration of generative AI and large language models (LLMs), while advancing scientific computing for HPC workloads. Get your H200 deployed faster with our white-glove integration service. Call (555) 123-4567 for same-week shipping on qualified orders.

Key Features

- ✓ 141GB HBM3e memory - nearly 2x the capacity of H100

- ✓ 4.8 TB/s memory bandwidth - about 1.4x the bandwidth of H100

- ✓ 1,979 TFLOPS FP8 performance with Transformer Engine

- ✓ Fourth-generation Tensor Cores with FP8 precision

- ✓ Second-generation Multi-Instance GPU (MIG) technology

- ✓ NVLink for multi-GPU scaling up to 256 GPUs

- ✓ Secure Boot and confidential computing support

- ✓ Full compatibility with NVIDIA AI Enterprise software
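The H100 comparisons in the feature list can be checked with simple arithmetic. The sketch below uses the H200 figures quoted above. The H100 SXM baseline (80 GB, 3.35 TB/s) is an assumption taken from NVIDIA's published specifications and is not stated on this page.

```python
# H200 figures come from the feature list above.
H200 = {"memory_gb": 141, "bandwidth_tbs": 4.8}

# Assumed baseline: H100 SXM as published by NVIDIA (not stated on this page).
H100_SXM = {"memory_gb": 80, "bandwidth_tbs": 3.35}


def ratio(metric: str) -> float:
    """Return the H200-to-H100 ratio for one metric."""
    return H200[metric] / H100_SXM[metric]


memory_ratio = ratio("memory_gb")
bandwidth_ratio = ratio("bandwidth_tbs")

print(f"Memory capacity:  {memory_ratio:.1f}x H100")
print(f"Memory bandwidth: {bandwidth_ratio:.1f}x H100")
```

Under these assumptions the ratios come out to roughly 1.8x for capacity and 1.4x for bandwidth, consistent with the "nearly 2x" and "1.4x" claims. A different H100 variant as the baseline would shift the numbers.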

Use Cases

- → Large Language Model (LLM) training and inference

- → Generative AI and foundation model development

- → Recommendation systems at scale

- → Scientific computing and molecular dynamics

- → Computational fluid dynamics (CFD)

- → Weather simulation and climate modeling

Technical Specifications

| Specification | Value |
| --- | --- |
| Architecture | Hopper |
| GPU Memory | 141 GB HBM3e |
| Memory Bandwidth | 4.8 TB/s |
| FP64 Performance | 34 TFLOPS (67 TFLOPS with FP64 Tensor Cores) |
| FP32 Performance | 67 TFLOPS (~494 TFLOPS TF32 Tensor Core, dense) |
| FP16 Performance | ~990 TFLOPS (Tensor Core, dense) |
| FP8 Performance | ~1,979 TFLOPS (Tensor Core, dense) |
| INT8 Performance | ~1,979 TOPS (Tensor Core, dense; ~3,958 TOPS with sparsity) |
| CUDA Cores | 16,896 |
| Tensor Cores | 528 (4th Gen) |
| NVLink | 900 GB/s (18 links) |
| Max TDP | 700W |
| Thermal Solution | Passive (cooled by the host HGX system, air or liquid) |
| Form Factor | SXM5 |
| PCIe Interface | PCIe Gen5 x16 |
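To put the peak compute and bandwidth figures in context, the sketch below applies a simple roofline estimate: attainable throughput is the lesser of peak compute and arithmetic intensity times memory bandwidth. It uses the FP8 and bandwidth values from the table above. The roofline model is a standard simplification, the function names are illustrative, and the intensity values are hypothetical examples rather than measurements.

```python
# Peak figures taken from the specification table above.
PEAK_FP8_TFLOPS = 1979.0   # dense FP8 Tensor Core throughput
MEM_BANDWIDTH_TBS = 4.8    # HBM3e bandwidth in TB/s


def ridge_point(peak_tflops: float, bandwidth_tbs: float) -> float:
    """Arithmetic intensity (FLOP/byte) above which a kernel is compute-bound."""
    return peak_tflops / bandwidth_tbs


def attainable_tflops(intensity: float, peak_tflops: float, bandwidth_tbs: float) -> float:
    """Roofline bound: limited by memory traffic or by peak compute."""
    return min(peak_tflops, intensity * bandwidth_tbs)


ridge = ridge_point(PEAK_FP8_TFLOPS, MEM_BANDWIDTH_TBS)
print(f"Ridge point: {ridge:.0f} FLOP/byte")

# Hypothetical intensities: a low one (memory-bound) and a high one (compute-bound).
for intensity in (2.0, 1000.0):
    bound = attainable_tflops(intensity, PEAK_FP8_TFLOPS, MEM_BANDWIDTH_TBS)
    print(f"{intensity:>7.1f} FLOP/byte -> at most {bound:.1f} TFLOPS")
```

Kernels with low arithmetic intensity sit well below the ridge point, so their throughput scales with memory bandwidth rather than with peak TFLOPS. This is why the bandwidth figure matters for memory-bound workloads such as LLM inference at small batch sizes. Real workloads will usually fall short of these theoretical bounds.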

Related Products

NVIDIA H100 80GB PCIe Gen5

NVIDIA H100 Tensor Core GPU in PCIe form factor with 80GB HBM3 memory. Ideal for deploying AI inference and training in standard servers without NVLink clustering requirements. Contact our sales team for volume pricing and immediate availability.

$29,999

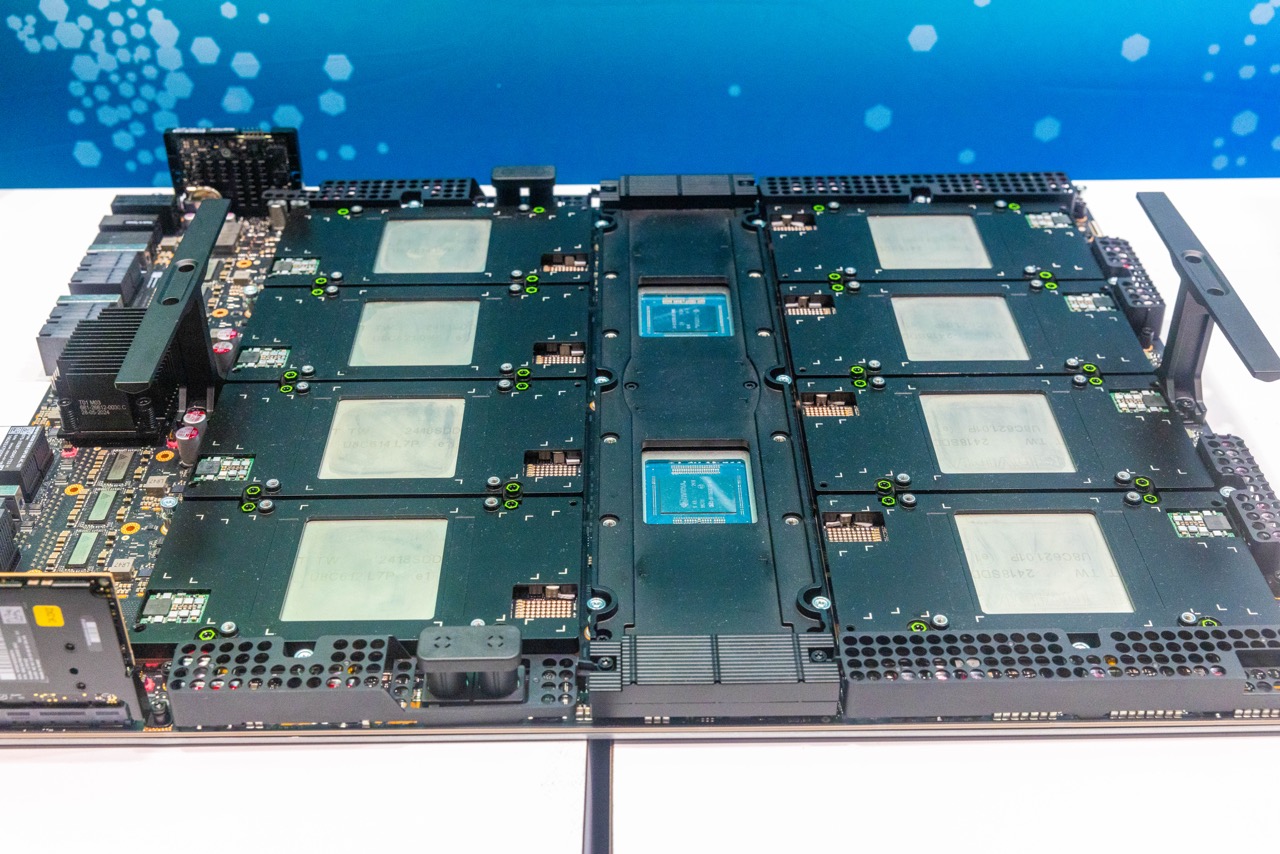

NVIDIA H100 80GB SXM5

NVIDIA H100 Tensor Core GPU in SXM5 form factor with NVLink for multi-GPU scaling. Designed for HGX server platforms and large-scale AI training clusters. Enterprise volume discounts available - contact sales for custom configurations.

$32,999

NVIDIA B200 192GB Blackwell

Revolutionary Blackwell architecture with 192GB HBM3e and FP4 precision for next-gen AI. Pre-order now for 2025 delivery - reserve your allocation with our sales team.

Contact sales for pricing

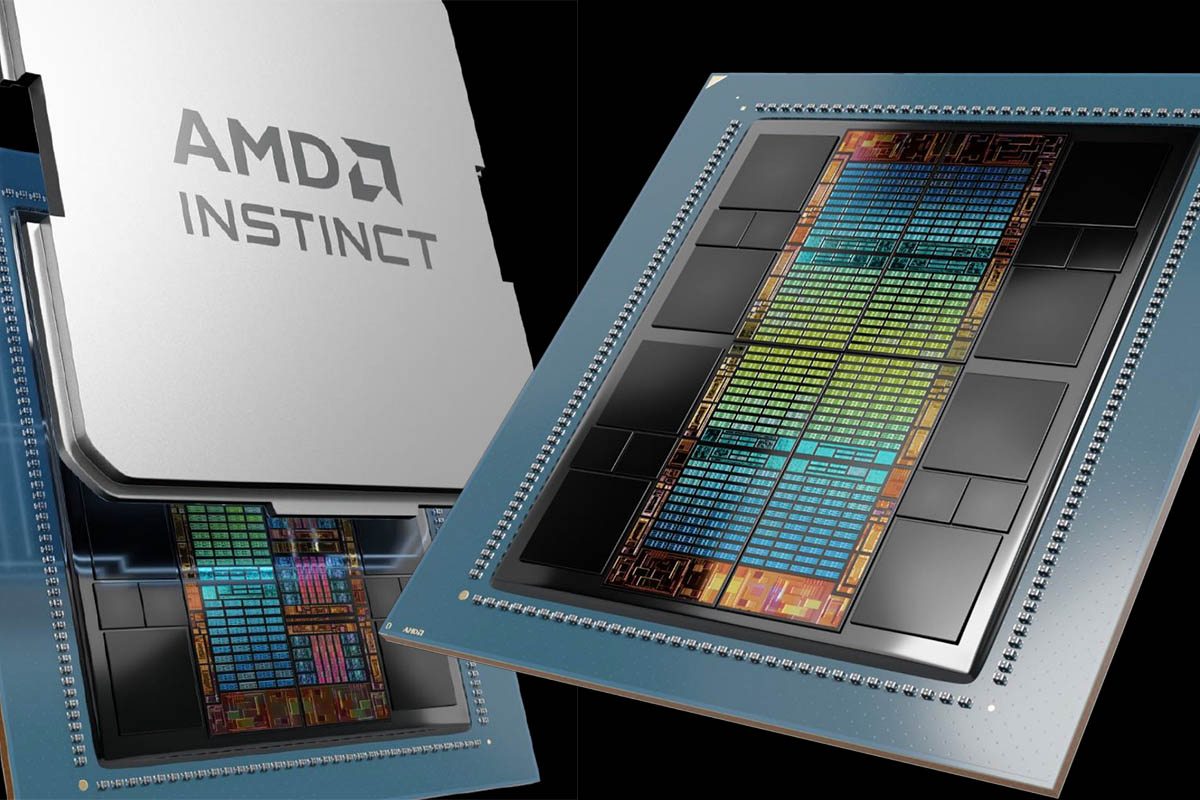

AMD Instinct MI300X 192GB HBM3 OAM

AMD's flagship AI accelerator with industry-leading 192GB HBM3 memory and 5.3TB/s bandwidth. Best-in-class performance per dollar for generative AI and large language models. Available now with competitive pricing and ROCm support.

$29,999