NVIDIA B200 192GB Blackwell

Revolutionary Blackwell architecture with 192GB HBM3e and FP4 precision for next-gen AI. Pre-order now for 2025 delivery - reserve your allocation with our sales team.

Price Available Upon Request

Contact us for custom pricing and availability

Ships directly from distributor

Price Available Upon Request

This cutting-edge product requires custom configuration and pricing. Contact our team for availability and quotes.

Or call us at (850) 407-7265

What it's for

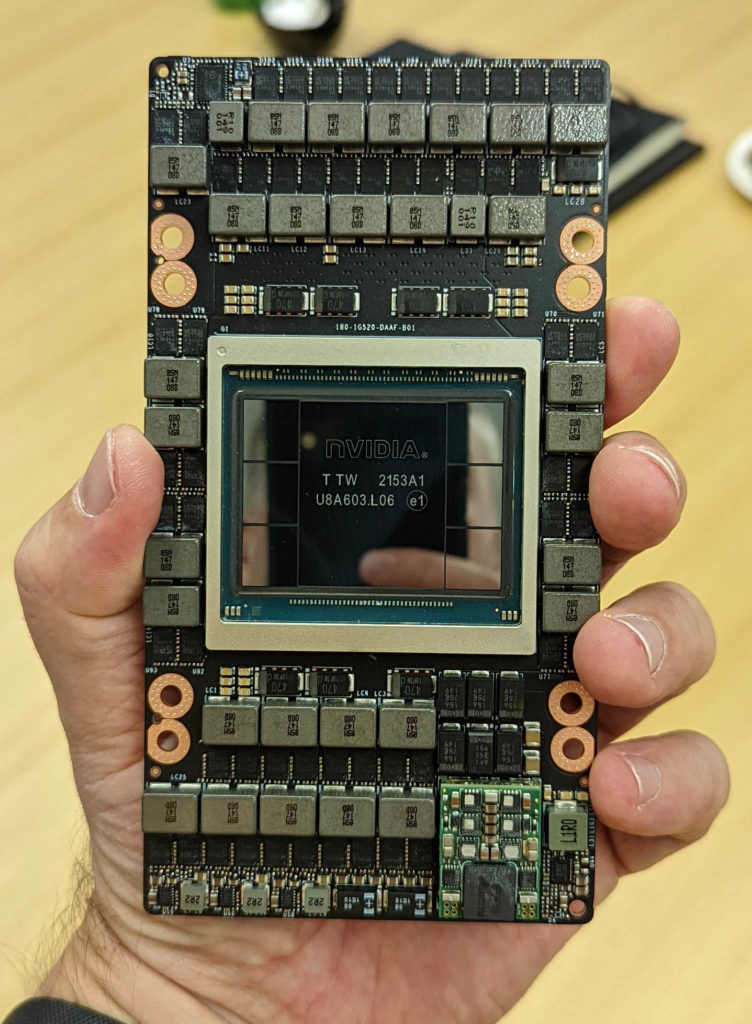

The NVIDIA B200 Tensor Core GPU represents a revolutionary leap in AI computing with the new Blackwell architecture. Featuring 208 billion transistors and a dual-die chiplet design, the B200 delivers 20 petaFLOPS of FP4 AI compute—5x more than H100. With 192GB of ultra-fast HBM3e memory and 8TB/s bandwidth, it's engineered to handle the most demanding foundation models and trillion-parameter AI workloads. Secure your Blackwell allocation today. Limited early access slots available for Q2 2025 delivery. Contact our enterprise team at (555) 123-4567 for priority ordering and deployment planning.

Key Features

- ✓208 billion transistors in dual-die chiplet design - 2.6x more than H100

- ✓20 petaFLOPS FP4 performance - 5x faster than H100 for AI

- ✓192GB HBM3e memory with 8TB/s bandwidth

- ✓Second-generation Transformer Engine with FP4 arithmetic

- ✓Advanced NVLink with 1.8TB/s bi-directional throughput

- ✓TSMC N4P process node for maximum efficiency

- ✓Fifth-generation Tensor Cores with multi-precision support

- ✓Designed for trillion-parameter model training and inference

Use Cases

- →Foundation model training (GPT-4 scale and beyond)

- →Trillion-parameter AI model development

- →Real-time generative AI inference at scale

- →Multi-modal AI (text, image, video, audio)

- →Scientific computing and simulation

- →Drug discovery and genomics research

Technical Specifications

| Architecture | Blackwell (5th Gen) |

| GPU Memory | 192 GB HBM3e |

| Memory Bandwidth | 8 TB/s |

| Transistor Count | 208 billion |

| FP4 Performance | 20 petaFLOPS (sparse) |

| FP8 Performance | ~10 petaFLOPS |

| FP16 Performance | ~5 petaFLOPS |

| Tensor Cores | 5th Generation |

| NVLink Bandwidth | 1.8 TB/s bi-directional |

| Process Node | TSMC N4P |

| Max TDP | 1000W |

| Thermal Solution | Liquid cooling required |

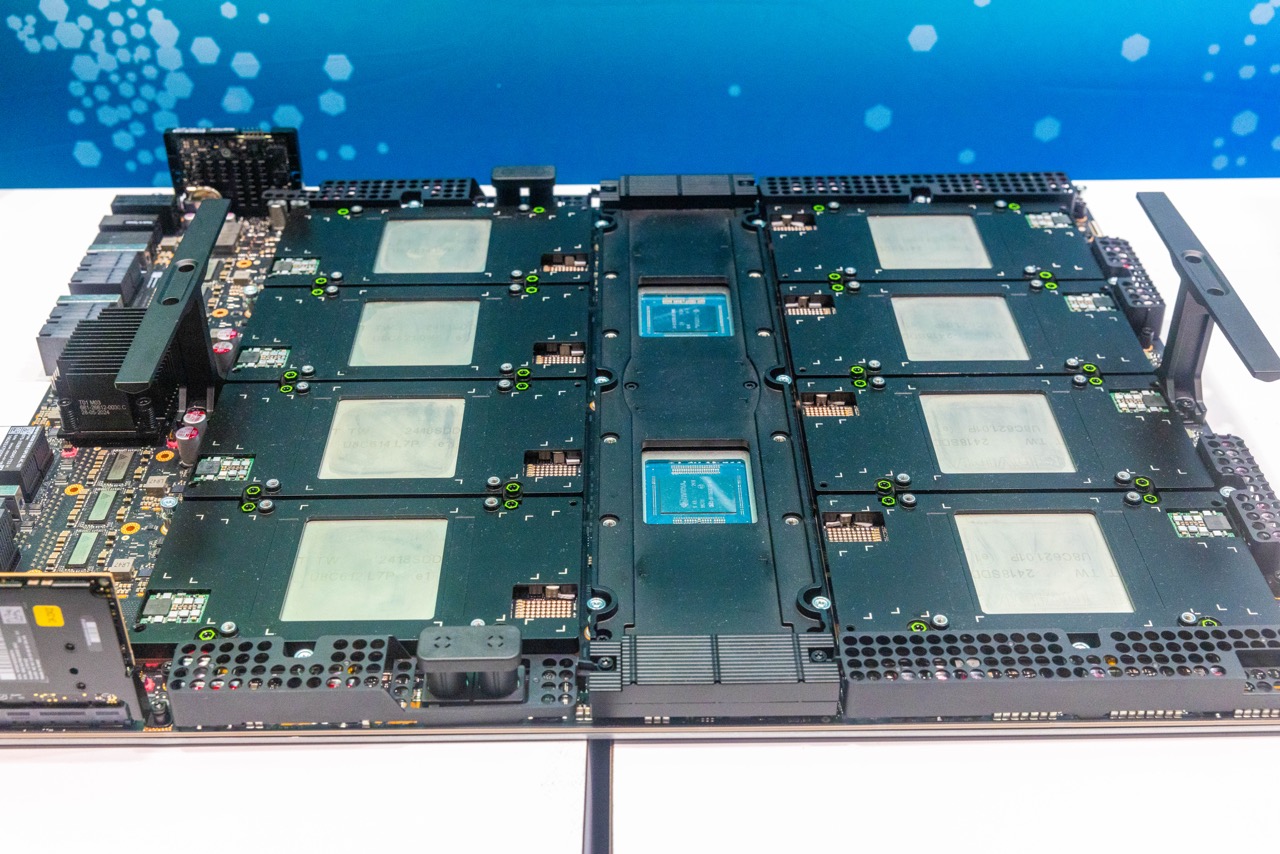

| Form Factor | NVL72 dual-die package |

| Die Configuration | Dual-chip module |

Related Products

NVIDIA H100 80GB PCIe Gen5

NVIDIA H100 Tensor Core GPU in PCIe form factor with 80GB HBM3 memory. Ideal for deploying AI inference and training in standard servers without NVLink clustering requirements. Contact our sales team for volume pricing and immediate availability.

$29,999

NVIDIA H100 80GB SXM5

NVIDIA H100 Tensor Core GPU in SXM5 form factor with NVLink for multi-GPU scaling. Designed for HGX server platforms and large-scale AI training clusters. Enterprise volume discounts available - contact sales for custom configurations.

$32,999

NVIDIA H200 141GB HBM3e SXM5

Industry-leading Hopper architecture GPU with 141GB HBM3e memory and 4.8TB/s bandwidth. Perfect for large language models, generative AI, and high-performance computing workloads. In stock now - contact us for immediate delivery and competitive pricing.

$39,999

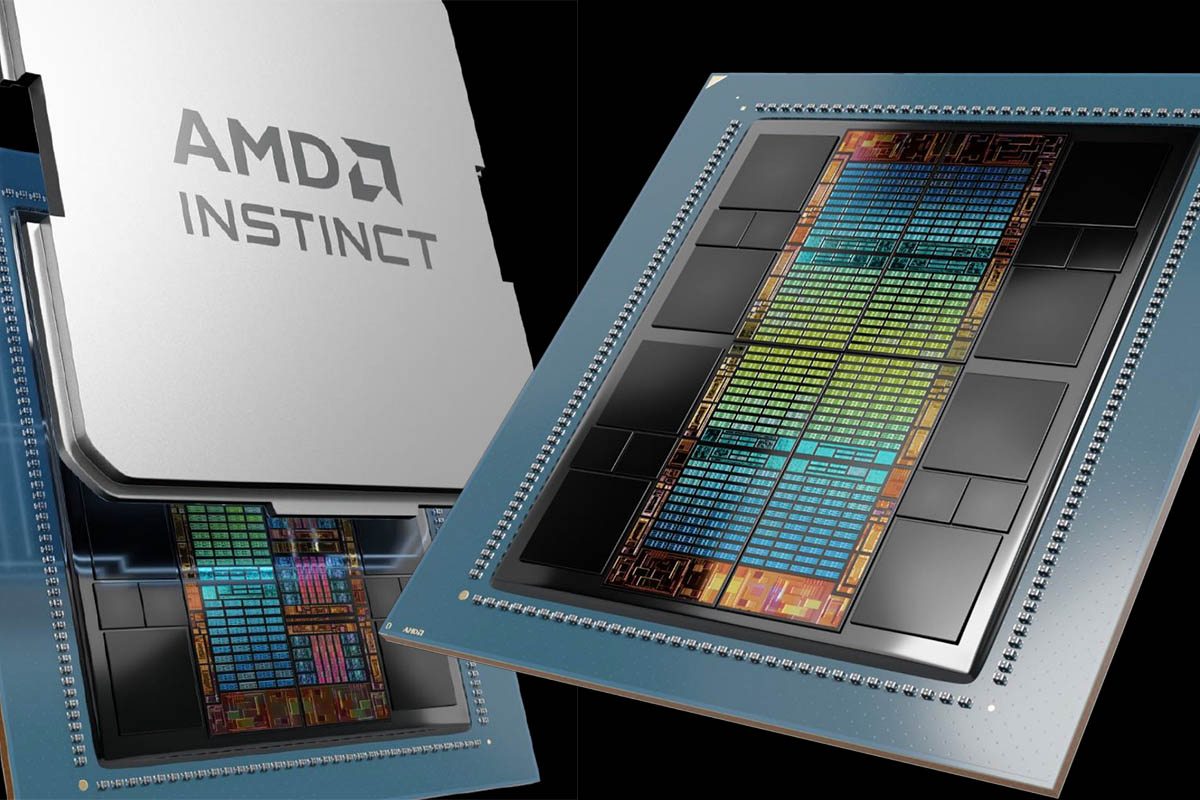

AMD Instinct MI300X 192GB HBM3 OAM

AMD's flagship AI accelerator with industry-leading 192GB HBM3 memory and 5.3TB/s bandwidth. Best-in-class performance per dollar for generative AI and large language models. Available now with competitive pricing and ROCm support.

$29,999